A/B Testing for Landing Pages: Boost Conversions Effectively

So, what exactly is A/B testing when we talk about landing pages? At its core, it's a straightforward way to compare two versions of a page—an 'A' version and a 'B' version—to see which one actually gets more people to take action. You show each version to a different slice of your audience and let their real behavior tell you which headline, image, or call-to-action is the winner.

Why A/B Testing Is Your Most Powerful CRO Tool

Before you even think about changing a button color because you have a "good feeling" about it, let's talk about why methodical testing is the absolute bedrock of Conversion Rate Optimization (CRO). Relying on your intuition or just copying what a competitor is doing is like trying to navigate a new city without a map. Sure, you might get lucky, but you're far more likely to end up lost.

A/B testing is your map. It replaces pure guesswork with a data-backed system for figuring out what truly motivates your audience to click, sign up, or buy.

This process allows you to make small, incremental changes that, when tested and proven, can add up to huge lifts in your conversion rates over time.

The Foundation of Smart Optimization

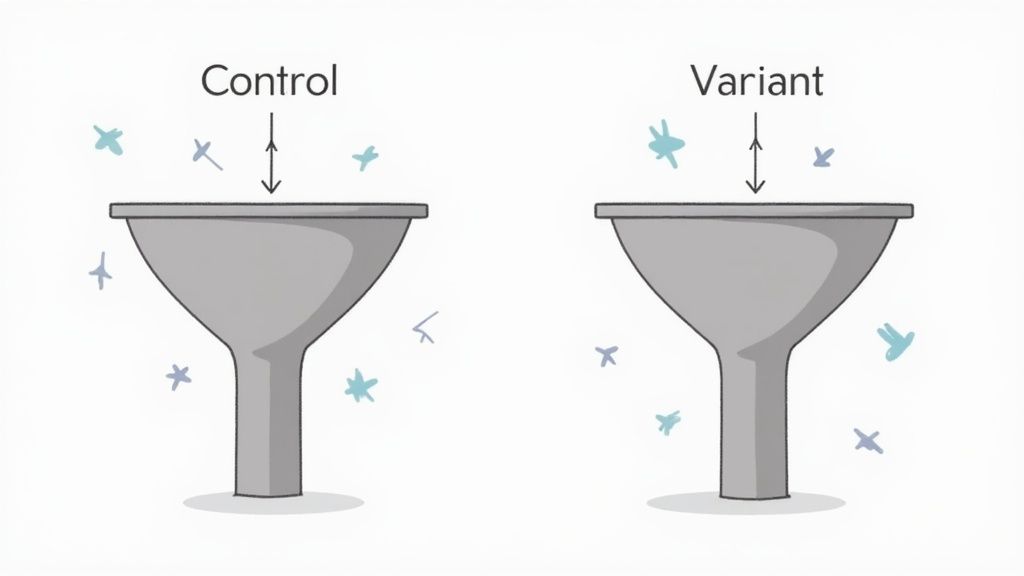

The idea is beautifully simple. You have your current page, the "control" (Version A). Then, you create a "challenger" (Version B) that has one specific change. By isolating that single variable, you can confidently attribute any difference in performance directly to that change.

Did changing your CTA from "Sign Up" to "Get Started Free" actually drive more demo requests? A properly run A/B test gives you a clear, data-backed answer instead of a hunch.

It's a proven method, which is why approximately 77% of businesses use A/B testing to sharpen their website's performance. But here's a dose of reality: it’s not a magic wand. Only about 1 in every 8 tests produces a statistically significant winner, which just highlights how crucial it is to be thoughtful and strategic. You can find more landing page performance stats in this report from Hostinger.

This data-driven discipline helps you build a high-performance conversion engine, turning your static landing pages into dynamic assets that are always getting better.

A/B Testing vs. Multivariate Testing

While A/B testing is your workhorse, it's good to know about its more complex cousin, multivariate testing (MVT). It's easy to get them mixed up, but they serve different purposes.

- A/B testing is perfect for testing one big, impactful change against your control. Think: a completely new headline or a different hero image.

- Multivariate testing is for testing multiple, smaller changes all at once to see how different combinations of elements perform. For example, you could test two headlines, two images, and two CTA buttons simultaneously to find the single best combination.
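The traffic cost of MVT comes from simple multiplication: two headlines × two images × two CTAs is 2 × 2 × 2 = 8 combinations, and each one gets only a slice of your visitors. Here's a minimal Python sketch of that arithmetic, assuming a hypothetical 1,000 daily visitors split evenly. The variant names are placeholders, not recommendations.

```python
from itertools import product

# Hypothetical element variants for a multivariate test (illustrative only).
headlines = ["Benefit-driven headline", "Feature-focused headline"]
images = ["Product shot", "Customer photo"]
ctas = ["Get Started Free", "Sign Up"]


def traffic_per_combination(daily_visitors: int, *element_options: list) -> tuple[list, float]:
    """Enumerate every combination of elements and the daily visitors each
    combination receives under an even split."""
    combos = list(product(*element_options))
    return combos, daily_visitors / len(combos)


combos, per_combo = traffic_per_combination(1000, headlines, images, ctas)
print(len(combos))  # 8 combinations
print(per_combo)    # 125.0 visitors/day each

# The same traffic in a two-version A/B test: 500.0 visitors/day per version.
_, per_version = traffic_per_combination(1000, ["Control", "Challenger"])
print(per_version)
```

Each MVT combination collects data four times more slowly than each side of an A/B test here, which is why MVT needs so much more traffic to reach significance.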

Key Takeaway: A/B testing is your go-to for focused experiments, especially if you have lower traffic, because it gets you to a conclusion faster. MVT is a powerful tool for high-traffic sites looking to fine-tune and find the optimal combination of several page elements.

Which method is right for you? It really boils down to your traffic volume and your testing goals.

Choosing Your Testing Method: A/B vs. Multivariate

Here’s a quick comparison to help you decide which testing approach best fits your landing page optimization goals and traffic levels.

| Aspect | A/B Testing | Multivariate Testing (MVT) |
|---|---|---|
| Goal | To determine the better-performing version between two distinct options (A vs. B). | To find the optimal combination of multiple elements on a single page. |
| Complexity | Simple to set up and analyze. | More complex; tests multiple variations of several elements simultaneously. |
| Traffic Needs | Works well with moderate traffic levels. | Requires significant traffic to achieve statistically significant results for all combinations. |
| Best For | Testing high-impact, single-element changes like headlines, CTAs, or hero images. | Optimizing multiple, smaller elements on a page like form fields, button colors, and body copy. |
| Insights | Provides a clear "winner" and "loser" for a specific change. | Reveals which combination of elements performs best and how elements interact with each other. |

For most teams just dipping their toes into landing page optimization, A/B testing is the way to go. It offers a clearer, more direct path to actionable insights, letting you learn quickly and build momentum with every successful experiment you run.

How to Form a Hypothesis That Gets Results

Every powerful A/B test I've ever seen started with a smart question, not just a random idea. Too many people jump in thinking, "Let's test the CTA button!" That’s not a plan; it's a recipe for confusing results because it has no real direction.

A strong, testable hypothesis is the absolute foundation of any successful experiment. It’s what turns your vague hunches into a clear, measurable prediction. This isn't about guessing what might work better. It’s about building an educated prediction based on real user behavior and cold, hard data.

Instead of just throwing spaghetti at the wall to see what sticks, you're making a calculated move designed to fix a specific problem you've already found on your landing page. This disciplined approach is what separates random tweaks from strategic, repeatable wins.

Grounding Your Hypothesis in Real Data

To build a hypothesis that actually has a fighting chance, you have to become a detective. Your first job is to hunt for clues that show you where your users are getting stuck, confused, or just plain frustrated. That means digging into both quantitative and qualitative data.

Start with the numbers. Analytics can tell you what is happening. Where are people dropping off in your funnel? Are they ditching your sign-up form after filling out just one field?

But analytics rarely tells you why. That's where the qualitative tools come in. They give you the human context behind all those data points.

- Heatmaps and Scroll Maps: These visually show you where people are clicking—or trying to click on things that aren't even links. A heatmap might reveal that everyone is completely ignoring your primary call-to-action.

- Session Recordings: Watching anonymized recordings of real user sessions is like looking right over their shoulder. You can see exactly where they hesitate, where their mouse movements show confusion, or where they start rage-clicking in pure frustration.

- User Surveys and Feedback Forms: Sometimes, the easiest way to figure out what's wrong is just to ask. A simple pop-up survey asking, "What's stopping you from signing up today?" can give you incredibly direct and valuable insights.

By combining these sources, you can zero in on specific friction points. For instance, a heatmap might show zero clicks on your features section, and a session recording could confirm that users scroll right past it. Boom. You've just identified a likely problem: your features section isn't compelling enough to hold attention.

Crafting a Clear and Testable Statement

Once you've found a problem backed by data, you can build a proper hypothesis. A solid hypothesis isn't just a simple prediction. It needs three key parts: a proposed change, an expected outcome, and the reasoning behind it.

I always use this simple framework to keep things sharp and focused:

If we [make this specific change], then [this specific metric will improve] because [this is the reason why we think it will work].

This structure forces you to be specific and connect your proposed solution directly to user behavior. It turns a fuzzy idea into a scientific statement you can actually prove or disprove.

Let’s walk through a real-world example. Say you noticed from session recordings that users are hesitating before clicking a "Submit" button on your demo request form. That generic word creates uncertainty—what am I submitting to?

- Weak Idea: "Let's test the CTA button text." (Too vague!)

- Strong Hypothesis: "If we change the CTA button text from 'Submit' to 'Get My Free Demo,' then we expect to see a 15% increase in form completions because the new copy clarifies the value and reduces anxiety about what happens next."

See the difference? The strong hypothesis is specific, measurable, and gives a clear "why." This clarity is absolutely vital when A/B testing landing pages. Whether your test wins or loses, you learn something valuable about your audience that you can apply to every experiment you run from now on.

Setting Up a Clean and Effective Landing Page Test

Okay, you've got a solid hypothesis. Now it's time to move from theory to action. This is where you actually build the experiment, create your landing page variations, and get the test live—without tripping over the common technical traps that can poison your data. Getting this part right isn't just important; it's non-negotiable if you want a clean, conclusive result.

The guiding principle here is isolation. To really understand what makes your users tick, you have to test one specific element at a time. If you change the headline, the hero image, and the CTA button all at once, you might see a lift in conversions, but you’ll have zero idea which change actually did the heavy lifting. Was it the slick new headline or that bright orange button? You'll never know.

By isolating a single variable (say, just your headline), you can confidently pin any difference in performance directly on that one change. This methodical approach is the absolute backbone of effective landing page A/B testing.

Designing Your Challenger Page

Your new variation, often called the "challenger," has to walk a fine line. It needs to be different enough from your current page (the "control") to actually have a shot at changing user behavior in a meaningful way. A super tiny change, like tweaking one word in a long paragraph, is pretty unlikely to produce a statistically significant result.

At the same time, your challenger has to maintain brand consistency. The design, tone, and overall vibe should still feel like you. A drastic change in fonts and colors can easily confuse visitors and pollute your data, because their reaction might be to the jarring design shift rather than the specific element you’re trying to test.

Pro Tip: When designing your challenger, go for a bold but focused change that directly addresses your hypothesis. If you believe a benefit-driven headline will beat a feature-focused one, make that new headline impossible to miss. Don't muddy the waters by also tweaking the sub-headline and body copy.

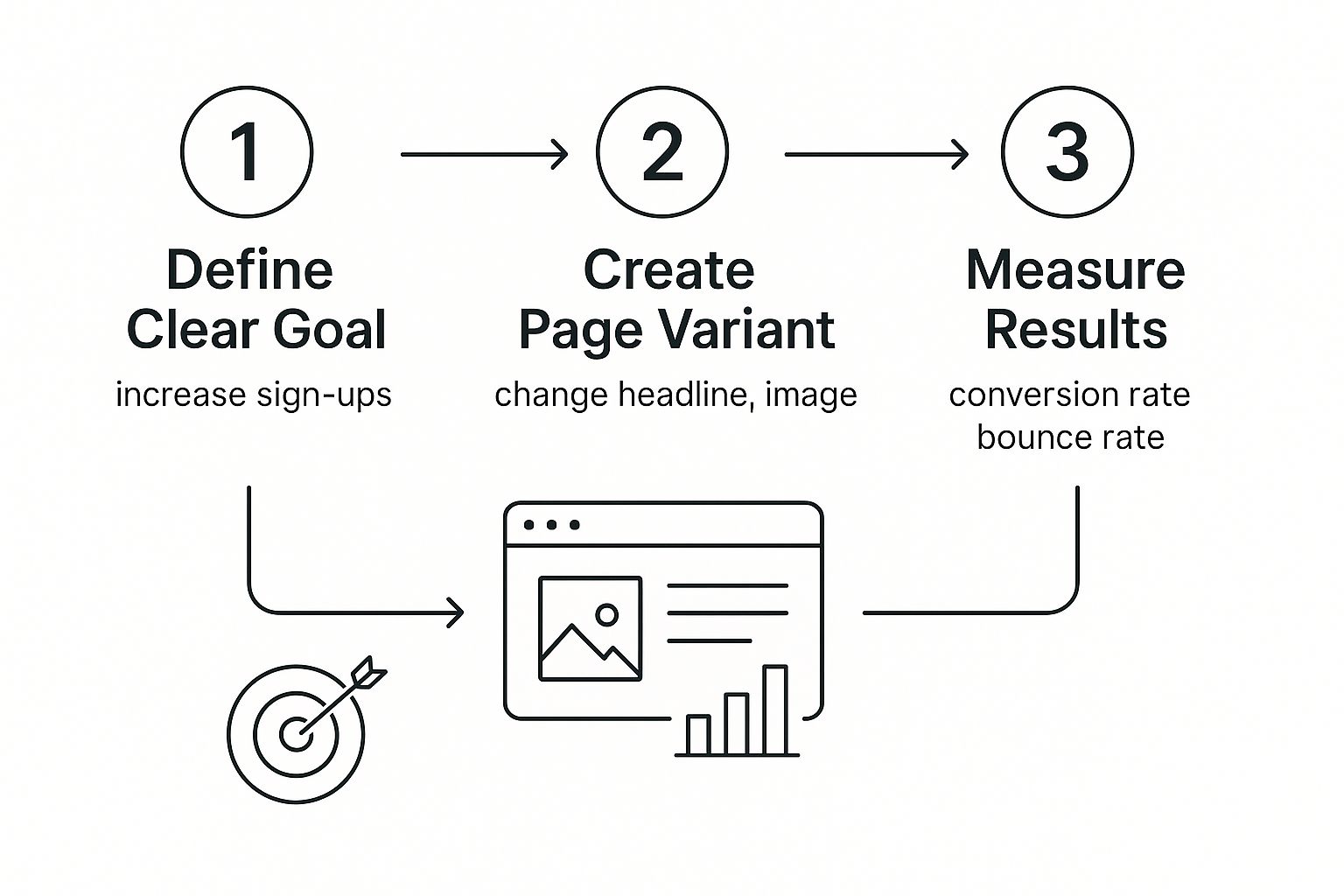

The whole process boils down to a simple but powerful cycle: clearly define what success looks like, create a variation that homes in on a single idea, and then measure the outcome.

Stick to this simple workflow, and you'll get an experiment structured to deliver clear, actionable insights instead of just a pile of ambiguous data.

Navigating the Technical Setup

With your designs locked in, it’s time for the technical part. This is where so many well-intentioned tests go off the rails because of small setup mistakes. Using dedicated A/B testing software makes this a thousand times easier, and it's a popular move for a good reason. In fact, about 44% of companies use specialized tools for A/B testing. It helps them pinpoint what works and ensures they spend their marketing budget on proven creatives instead of just guessing. You can find more great insights on landing page optimization over at Contentful.com.

Before you hit that launch button, run through this pre-flight checklist. It'll help you dodge the most common mistakes.

- Define Your Primary Conversion Goal: What’s the one action that signals a "win"? Is it a form submission, a button click, or a demo booking? Define this single goal clearly inside your testing software. Everything will be measured against this primary metric.

- Set Up Secondary Goals: While you have one main goal, tracking secondary metrics like scroll depth or time on page can give you some amazing context, especially if the primary result is too close to call.

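- Ensure Correct Traffic Allocation: Most tools will default to a 50/50 split, sending half your audience to the control and the other half to the challenger. Double-check that this split is active, that visitors are assigned at random, and that returning visitors keep seeing the same version. Random, consistent assignment is what keeps your sample unbiased.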

- Check for Cross-Device Consistency: This is a big one. Preview both of your variations on desktop, tablet, and mobile. A challenger that looks incredible on a big screen but is completely broken on a phone will tank your test results.

- Verify Tracking Pixels and Scripts: Make sure your analytics and tracking codes (like Google Analytics or a marketing automation pixel) are installed correctly on both the control and the challenger. Forgetting to add them to the new page is a classic, costly error.
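Your testing tool handles traffic allocation for you, but it helps to see what a fair, "sticky" split looks like under the hood. The Python sketch below shows one common approach, hash-based bucketing; it is not necessarily how any particular tool does it, and the visitor IDs and the experiment name `headline-test` are hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, split_a: float = 0.5) -> str:
    """Deterministically bucket a visitor into 'A' (control) or 'B' (challenger).

    Hashing the visitor ID together with the experiment name means the same
    visitor always lands in the same bucket for this test, while different
    experiments get independent splits.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) onto the range [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "A" if bucket < split_a else "B"


# Rough sanity check: over many synthetic visitor IDs the split should land near 50/50.
ids = [f"visitor-{i}" for i in range(10_000)]
share_a = sum(assign_variant(v, "headline-test") == "A" for v in ids) / len(ids)
print(round(share_a, 3))
```

Because assignment depends only on the visitor ID and experiment name, a returning visitor never flips between control and challenger, which would otherwise blur the results.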

Taking a few extra minutes to be meticulous about your setup can save you from weeks of collecting totally worthless data. A clean setup is the only way to get results you can actually trust and act on with confidence.

Running Your Test and Tracking the Right Metrics

Launching the experiment is the easy part. The real discipline in A/B testing landing pages is knowing when to call it. The temptation to peek at your results after a few hours and declare a winner is huge, but it’s also the fastest way to get junk data. A successful test isn’t a sprint; it’s a marathon paced by statistical confidence.

You’ve already done the hard work of building a solid hypothesis and setting up a clean test. Now it’s about having the patience to let the experiment run its course and gather enough data to make a smart call. That means no knee-jerk reactions. Trust the process.

Beyond the Primary Conversion Goal

Your main conversion goal—a form fill, a purchase, whatever—is the headline act. But it doesn't tell the whole story. The best optimizers look at a whole host of metrics to understand why one version is winning. This is where secondary metrics come in, providing critical context about what users are actually doing.

Imagine your new challenger page has the exact same conversion rate as your control. Looks like a tie, right? Not so fast. If you dig a little deeper and see the challenger also has a 25% lower bounce rate and people are spending 40 seconds longer on the page, that’s not really a tie. As long as those gaps are consistent and backed by enough data, it's a strong signal that your new version is more engaging, and that's a valuable insight to build on for your next test.

Key secondary metrics you should be watching include:

- Bounce Rate: What percentage of visitors bail after seeing just one page? A lower bounce rate on your challenger means the message is hitting home.

- Time on Page: How long are people sticking around? More time usually points to deeper engagement with your copy and offer.

- Scroll Depth: How far down the page are people actually getting? This is pure gold for long-form landing pages, telling you if your new copy is keeping them hooked.

- Click-Through Rate on Secondary Elements: Are more people clicking that "learn more" link or watching your video on one version over the other?

Tracking these data points gives you a much richer picture of user behavior, turning even an "inconclusive" test into a valuable learning experience.

Reaching Statistical Significance

The single most critical concept in running a legit A/B test is statistical significance. Put simply, it tells you how confident you can be that your results aren't just a random fluke. The industry standard is to aim for a 95% confidence level. Strictly speaking, that means if there were no real difference between your variations, you'd see a gap as large as the one you observed less than 5% of the time. In practice, it's the bar a result has to clear before you treat the difference as real.

Crucial Insight: Ending a test after just a couple of days, even if one version is ahead by 30%, is a massive mistake. Early results are notoriously volatile and often lead to false positives. You must collect enough data over a sufficient period to let the numbers stabilize.
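To see why peeking is so risky, here's a small Python simulation of A/A tests, where both "variations" are identical, so every declared winner is a false positive. It's a sketch under simplified assumptions: a hypothetical 5% conversion rate, 200 visitors per variation per day for 14 days, and a pooled two-proportion z-test at the 95% level. It compares checking every day and stopping at the first significant result against checking once at the planned end.

```python
import math
import random


def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or all conversions) yet: no evidence either way
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(n_tests: int = 300, days: int = 14, visitors_per_day: int = 200,
                      rate: float = 0.05, alpha: float = 0.05,
                      seed: int = 7) -> tuple[float, float]:
    """Run A/A tests and return two false-positive rates:
    (check daily and stop at the first significant result, check once at the end)."""
    rng = random.Random(seed)
    peeking_hits = 0
    fixed_hits = 0
    for _ in range(n_tests):
        conv_a = conv_b = n = 0
        called_early = False
        for _ in range(days):
            # Both variations share the same true conversion rate.
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_day))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_day))
            n += visitors_per_day
            if not called_early and z_test_p_value(conv_a, n, conv_b, n) < alpha:
                called_early = True  # a daily "peeker" would stop and declare a winner here
        peeking_hits += called_early
        fixed_hits += (z_test_p_value(conv_a, n, conv_b, n) < alpha)
    return peeking_hits / n_tests, fixed_hits / n_tests


peeking_rate, fixed_rate = simulate_aa_tests()
print(f"Stop at first significant daily peek: {peeking_rate:.1%} false winners")
print(f"Single look at the planned end:       {fixed_rate:.1%} false winners")
```

The single planned look should hover around the 5% you signed up for, while the daily peeker "finds" a winner noticeably more often, even though nothing changed between the pages.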

Before you even think about launching, use an online A/B test sample size calculator. You’ll plug in your page’s current conversion rate and the minimum improvement you're hoping to detect. Most calculators also ask for statistical power (80% is a common default), which is the chance your test will catch that improvement if it really exists. The calculator will spit out the number of visitors you need for each variation to hit that 95% confidence level. This takes the guesswork out of it and gives you a clear finish line.
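If you're curious what those calculators are doing, many use some variant of the standard two-proportion sample size formula. Here's a minimal Python sketch, assuming a two-sided test at 95% confidence, 80% power, and a minimum improvement expressed as a relative lift (0.20 means +20%). Individual tools may use slightly different formulas, so treat this as an estimate.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH variation to detect a relative lift of
    `relative_mde` over `baseline_rate` with a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical pages: same +20% target lift, different baseline conversion rates.
print(sample_size_per_variant(0.03, 0.20))  # 3% baseline
print(sample_size_per_variant(0.01, 0.20))  # 1% baseline
```

With these assumptions, a 3% baseline needs roughly 14,000 visitors per variation, and a 1% baseline needs roughly three times as many, which is why low-converting pages are so slow to test.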

Respecting the Business Cycle

Traffic is never consistent. User behavior on a Monday morning is completely different from a Saturday night. A B2B SaaS product will likely see most of its action during the 9-to-5 grind, while an e-commerce store might see its traffic spike in the evenings and on weekends.

Because of this, you should always run your test for at least one full business cycle—usually a full week, sometimes two. This ensures you capture the natural highs and lows of user behavior so your data isn't skewed by a weekend slump or a weekday rush.

On top of that, you have to be aware of outside events that can contaminate your results.

- Are you running a huge sale that's driving a ton of unusual traffic?

- Is it a major holiday that could mess with normal buying habits?

- Did an influencer just give you a shoutout, causing a massive traffic spike?

If any of these things happen, you might want to pause the test or just let it run longer to water down the impact of the anomaly. Clean data is the bedrock of a trustworthy result, and protecting the integrity of your experiment is everything.

Analyzing Your Results and Making Smart Decisions


Alright, the test has run its course and the data is in. This is where the real work begins—transforming a spreadsheet of raw numbers into actual business intelligence.

It's the moment of truth where you separate what you thought your audience wanted from what their actions proved they actually respond to. Honestly, interpreting the results correctly is every bit as important as designing a solid test in the first place.

Your testing tool will spit out a report, and the big numbers—like the conversion rate—are easy to find. But the real story is usually hiding in the details. Before you pop the champagne and declare a winner, you need to get comfortable with two critical concepts that tell you if your results are legit or just random noise.

Understanding the Numbers That Matter

First up is statistical confidence, which we touched on earlier. Hitting a 95% confidence level is the industry standard. It means that if the two pages actually performed the same, a difference as large as the one you saw would show up by chance less than 5% of the time. This is your green light to trust the result and act on it.
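Your testing tool runs this check for you, but here's a minimal Python sketch of one common method behind it, a pooled two-proportion z-test, so the "95% confidence" verdict is less of a black box. The conversion counts are made up for illustration, and your tool may use a different statistical approach.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test: returns (z statistic, two-sided p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # overall rate, assuming no real difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical results: control 300/10,000 (3.0%), challenger 360/10,000 (3.6%).
z, p_value = two_proportion_z_test(300, 10_000, 360, 10_000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
print("Significant at 95% confidence" if p_value < 0.05 else "Not significant yet")
```

With these made-up numbers the z statistic lands around 2.4, past the 1.96 cutoff that corresponds to a p-value below 0.05.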

The second concept is the margin of error. This number gives you the likely range of the true conversion rate. For example, if your new page shows a 10% conversion rate with a margin of error of ±2 percentage points, its real performance is probably somewhere between 8% and 12%. Knowing this range keeps you from overreacting to tiny differences that might not mean anything.
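Here's where a number like "±2 percentage points" comes from. This minimal Python sketch uses the simple normal-approximation (Wald) interval, assuming a hypothetical 90 conversions out of 900 visitors. Many tools use more robust intervals (such as Wilson), which behave better at small sample sizes or very low conversion rates.

```python
import math
from statistics import NormalDist


def conversion_rate_interval(conversions: int, visitors: int, confidence: float = 0.95):
    """Normal-approximation (Wald) interval for one variation's conversion rate.
    Returns (rate, margin of error, (low, high))."""
    rate = conversions / visitors
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 at 95%
    margin = z * math.sqrt(rate * (1 - rate) / visitors)
    return rate, margin, (rate - margin, rate + margin)


rate, margin, (low, high) = conversion_rate_interval(90, 900)
print(f"{rate:.1%} ± {margin * 100:.1f} points -> {low:.1%} to {high:.1%}")
# 10.0% ± 2.0 points -> 8.0% to 12.0%
```

Because the margin shrinks with the square root of the visitor count, halving it takes roughly four times as much traffic.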

Key Takeaway: A test result isn't just a single number. It's a conclusion backed by statistical confidence. If you have a clear winner with 95% confidence, you can move forward decisively. Anything less, and you’re still making an educated guess.

What to Do with a Clear Winner

When you get a statistically significant winner, take a moment to celebrate! You’ve successfully found a better way to connect with your audience. But don’t just move on; you have to make sure you deploy the winning version correctly.

Most testing platforms have an option to formally "Choose as winner" (the exact label varies by tool). Clicking this usually triggers two important actions:

- It immediately starts sending 100% of future traffic to the winning variation.

- It archives the losing version, taking it out of rotation but saving the data for your records.

This process ensures everyone sees the best-performing page from that point forward and prevents any old versions from accidentally showing up again. Once you’ve done this, the test is officially over and the improvement is locked in.

The Inconclusive Test Is Not a Failure

So what happens when the test ends and... nothing? The conversion rates are nearly identical and the confidence level is nowhere near 95%. This is not a failure. It’s actually a powerful learning opportunity.

An inconclusive result tells you something incredibly valuable: your change wasn't impactful enough to sway user behavior.

This is your cue to dig deeper into the secondary metrics. Did one version have a much lower bounce rate? Did people on the challenger page spend more time reading or scroll further down? These behavioral clues are gold. They might suggest your new messaging was more engaging, even if it didn't directly lead to more conversions right away.

Use that information to form a bolder, more informed hypothesis for your next experiment. Maybe that headline change was just too subtle. Your next test could involve a complete rewrite of the value proposition, armed with the engagement data you just collected.

Build Your Library of Knowledge

Finally, every single test—whether it’s a win, a loss, or a tie—needs to be documented. Create a simple spreadsheet or a shared document that serves as your testing archive.

For each experiment, you should log:

- Hypothesis: What did you test and what did you expect to happen?

- Screenshots: A quick visual of the control and the variation(s).

- Results: The key data points, especially confidence level and conversion lift.

- Insights: A single sentence summarizing the main takeaway. What did you learn?
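If your archive outgrows a hand-edited spreadsheet, a consistent record format keeps entries comparable from test to test. Here's a minimal Python sketch, assuming a CSV file is good enough for your team. The field names follow the list above, and the sample entry (reusing the "Submit" vs. "Get My Free Demo" hypothesis) uses placeholder numbers, not real results.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class TestLogEntry:
    """One row in a testing archive."""
    hypothesis: str
    screenshots: str        # paths or links to control/variation captures (hypothetical)
    confidence: float       # e.g. 0.95
    conversion_lift: float  # relative lift of the challenger, e.g. 0.12 for +12%
    insight: str


def write_archive(entries: list[TestLogEntry]) -> str:
    """Serialize archive entries to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(TestLogEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buffer.getvalue()


# Placeholder values for illustration only.
entry = TestLogEntry(
    hypothesis="If we change 'Submit' to 'Get My Free Demo', form completions rise",
    screenshots="control.png; variation-b.png",
    confidence=0.88,
    conversion_lift=0.04,
    insight="CTA copy alone did not clearly move completions; try a bolder value-prop test.",
)
out = write_archive([entry])
print(out)
```

`csv.DictWriter` quotes fields that contain commas automatically, so free-text hypotheses and insights stay in their own columns.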

Over time, this archive becomes your company’s institutional memory. It stops you from rerunning tests that have already failed and helps you spot powerful patterns in user behavior. This library of insights is what makes every future test smarter, ensuring your efforts in landing page A/B testing compound into significant, long-term growth.

Got Questions About Landing Page A/B Testing?

Even with a solid plan, you’re going to have questions pop up when you're in the trenches of A/B testing your landing pages. Getting a handle on these common issues ahead of time will help you dodge pitfalls, test with more confidence, and squeeze more value out of every single experiment.

Let's dive into some of the questions I hear most often from teams just getting started.

How Much Traffic Do I Really Need for an A/B Test?

I wish there was a magic number, but the truth is, it depends. The traffic you need is tied directly to your page's current conversion rate and how big of a lift you’re expecting from your change. A page with a really low conversion rate is going to need a ton more traffic to get a reliable result.

As a rough starting point, try to aim for at least 1,000 visitors and 100 conversions for each version of your page. But before you even think about launching, plug your numbers into a sample size calculator. It'll tell you how many visitors each version needs to reach a 95% confidence level (the industry standard for trusting your data), and dividing that by your daily traffic gives you a solid estimate of how long the test needs to run.

My Take: Kicking off a test without enough traffic is the fastest way to get misleading results. It’s always better to hold off for a high-impact idea than to run a weak test on a low-traffic page that can't give you a clear winner.

What Are the Best Things to Test on a Landing Page?

When you’re just starting out, you want to go for the big wins. Focus on the elements that have a direct line to a user's decision-making process. This is where you’ll find the best opportunities for a significant lift right out of the gate.

Your first few tests should zero in on these key players:

- The Headline: This is your first impression. Try pitting a benefit-driven headline against one that's more focused on features.

- The Call-to-Action (CTA): Experiment with everything. The button copy ("Get Started" vs. "Claim Your Free Trial"), the color, the size, and even where it sits on the page can make a huge difference.

- Hero Image or Video: Does a slick product shot work better than a photo of a happy customer? What happens if you swap that static image for a short demo video?

- Form Fields: How long is your form? You might be surprised by the lift you see just by removing one optional field.

- Social Proof: Test out different testimonials, customer logos, or hard numbers (like "Trusted by 10,000+ teams") to figure out what builds the most trust with your audience.

The golden rule here is to test one thing at a time. If you change the headline and the button color, you’ll have no idea which change actually caused the shift in conversions. Keep it clean.

How Long Should I Let an A/B Test Run?

The right duration comes down to two things: reaching statistical significance and capturing a full user cycle. Whatever you do, never stop a test early just because one variation shoots ahead in the first couple of days. Early results are notoriously shaky and a classic recipe for a false positive.

A good rule of thumb is to let your test run for at least one to two full weeks. This helps smooth out any weird fluctuations between weekday and weekend traffic, giving you a more realistic picture of user behavior.

Your main goal is to hit the sample size you calculated from the start and reach a statistical confidence of 95% or higher. Be patient. Let the data do the talking until you have a result you can bet on.

What if My A/B Test Results Are Inconclusive?

First off, an inconclusive result isn't a failure—it's feedback. It’s your audience telling you that the change you made wasn't big enough to move the needle on their behavior, and that’s a valuable lesson.

Before you do anything else, double-check that you ran the test long enough to get enough data. If your sample size was solid, an inconclusive result might just mean your visitors don't really care if your button is green or blue.

This is a great time to dig into your secondary metrics. Did one version tank the bounce rate, even if it didn't lift conversions? These little clues can help you shape a bolder, more informed hypothesis for your next experiment. Sometimes, learning what doesn't work is just as important as finding a clear winner.

Want to see what the most successful SaaS companies are doing on their landing pages? Pages.Report gives you access to insights from over 368 proven products. Stop guessing and start learning from the best at https://pages.report.